Written by Seungmin (Helen) Lee for World Politics Review

In recent years, artificial intelligence has emerged as the most significant driver of global data center demand. Four tech giants—Amazon Web Services, Google, Meta and Microsoft—currently control 42 percent of U.S. data center capacity, and these companies spent $155 billion on AI infrastructure in the first half of 2025. Elsewhere, China is building 30 AI data centers in the deserts of its remote Xinjiang region, and the Dubai-based EDGNEX Data Centers recently announced a $2.3 billion investment into a 144-megawatt AI data center in Indonesia. Overall, global data center demand is expected to triple by 2030, according to a recent McKinsey study.

The scale of these investments underscores AI’s significance not only for economic competitiveness but also for national security, which is driving governments to pursue “sovereign AI”: the ability of a nation to ensure it can develop, govern and use its AI models and supporting infrastructure. Yet, the race to achieve sovereign AI is overlooking critical security vulnerabilities in the very data centers that power the AI models.

For example, President Donald Trump’s AI Action Plan, which was released in July 2025, mandates that federal land be made available for AI data center construction, but it also encourages businesses to build AI tech stacks and data centers both domestically and abroad. The motivation behind exporting AI data centers is twofold. First, strengthening technological relationships with other nations can help the U.S. become a world leader in technology and digital infrastructure. Second, there is a business motivation to minimize costs by building data centers in countries with cheaper labor, energy and land, such as Malaysia.

While these motivations are understandable, they pay too little attention to critical security concerns. As AI becomes a key component of economic competitiveness and national security, the data centers hosting AI models will increasingly become targets for state-sponsored hacking groups. Once these groups have access to AI data centers, they can extract information about AI models and training data to steal intellectual property, build similar models with fewer resources, create vulnerabilities in AI models and bias their outputs, and access sensitive data.

Moreover, when sourcing components and constructing AI data centers, there is a risk of Chinese sabotage operations, as Chinese companies currently dominate manufacturing for much of the necessary hardware, such as transformer substations critical for power systems. These companies can install backdoors in their components, creating an entryway for hackers to penetrate the data centers. While AI-specific hardware is also manufactured by the Taiwan Semiconductor Manufacturing Company, there are strong suspicions that the Chinese Communist Party already has spies within TSMC.

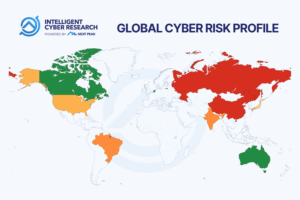

Once AI data centers are operational, sophisticated and well-resourced state hacking groups, most prominently from Russia, China, Iran and North Korea, can pose a serious threat to even the biggest U.S. companies and critical infrastructure. Recent reporting on the Chinese state-sponsored hacking group Salt Typhoon, which stole personal data on almost every American, including high-value and protected targets like Trump and Vice President JD Vance, demonstrates the sophistication of such state-sponsored hackers. In 2024, OpenAI published a blog post highlighting the importance of increasing the security of AI data centers and associated infrastructure in order to protect AI from similar attacks.

The trend toward building “offshore” AI data centers only opens them up to further risk, and it isn’t limited to the United States. Last month, South Korean company LG CNS announced its plans to build a $72 million AI data center in Indonesia, and the United Arab Emirates shared its plans to invest up to $59 billion to build Europe’s largest AI data center in France. Earlier in 2025, the U.S. and UAE also revealed a joint plan to build a massive AI data center in Abu Dhabi, projected to be the largest of its kind outside the U.S.

This joint effort should concern U.S. policymakers and national security professionals, because the Persian Gulf is part of China’s “Digital Silk Road 2.0,” and the UAE has already adopted Chinese 5G technology and city-wide surveillance programs. This means that the Chinese Communist Party could potentially gain access to the future U.S.-UAE AI data center as well.

Cases like these demonstrate that building AI data centers requires careful consideration of cybersecurity and geopolitical risks. Because defending against the best-resourced and best-trained threat actors requires significant investments from AI data center companies, national AI policies and plans should incentivize these operators to meet precise technical standards for cybersecurity.

There are several ways to motivate these companies to invest in security, such as tax breaks for operators that implement strong security requirements. Policymakers should also require a certain level of security for AI data centers constructed on federal land and tie security requirements to government contracts for AI data center companies. Once such regulations exist, there will be a distinction between noncompliant and compliant AI data centers, and investors, customers and even potential employees will naturally be attracted to the compliant ones, creating secondary incentives for the data center operators and companies. Looking beyond individual nations, digital solidarity, in the form of international and regional cooperation to incentivize and promote AI data center security, will be critical as companies continue to build abroad.

Despite the importance of national policies that secure AI data centers, U.S. policies still have gaps. Former President Joe Biden took a good first step in January, when he issued an executive order that required AI data center operators to submit security proposals when requesting to build on federal land, but this order was rescinded by Trump in July. In fact, Trump’s subsequent executive orders and policies—including the aforementioned AI Action Plan—do not refer to security requirements for AI data centers unless they are used by the military or the intelligence community.

Other nations are also behind on their AI policies. The European Union’s AI Act does not refer to AI data center security at all, nor does South Korea’s flagship AI law. Globally, policymakers and governments will need to start focusing on the security gap in AI data centers in order to maintain national security and economic competitiveness. After all, sovereign AI means little if the infrastructure that underpins it is left unprotected.

This article was originally published by WPR on September 18, 2025.